Data-driven Vulnerability Management

The Volume & Vulnerability Prioritization Challenge

Cyber security teams stand no chance of catching up with vulnerability remediation given the incoming volume. Cyber security burnout is real and will only get worse. Sure, AI will (at some point down the line) help address the sheer volume of vulnerabilities, but the backlogs still remain.

And the more “shifting left” we do in security, the more the backlog grows: shifting more tools left means a higher raw vulnerability count and, sadly, finding vulnerabilities earlier doesn’t necessarily mean fixing them earlier, contrary to what we envisioned. But more pressing than the sheer number of vulnerabilities is the inability to prioritize properly, which often leads security teams to pile the pressure on engineering teams to “fix all the things or else”…

As you can see above, vulnerability counts have been trending up and to the right for years and by all indications will continue to do so; yet only roughly 6% of all published CVEs have been observed as exploited in the wild, and even that 6% represents nearly 15k exploited vulnerabilities.

Critical: 1,773 CVEs (CVSS 9.0–10.0)

High: 6,521 CVEs (CVSS 7.0–8.9)

Medium: 10,607 CVEs

Low/Unrated: 2,600 CVEs

Most organizations still lean on the Common Vulnerability Scoring System (CVSS) to gauge severity and decide what to patch first. CVSS provides a standardized 0–10 severity score based on inherent technical factors: how easily a flaw can be exploited (attack vector, complexity, etc.) and its impact.
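The severity labels used in the counts above correspond to the CVSS v3.x qualitative severity rating scale published by FIRST (None 0.0, Low 0.1–3.9, Medium 4.0–6.9, High 7.0–8.9, Critical 9.0–10.0). A minimal Python sketch of that mapping, assuming a v3.x base score already rounded to one decimal; the function name is illustrative:

```python
def cvss_severity(score: float) -> str:
    """Map a CVSS v3.x base score (0.0-10.0) to its qualitative rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS base scores range from 0.0 to 10.0, got {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:      # 0.1-3.9
        return "Low"
    if score < 7.0:      # 4.0-6.9
        return "Medium"
    if score < 9.0:      # 7.0-8.9
        return "High"
    return "Critical"    # 9.0-10.0


print(cvss_severity(9.8))  # Critical
print(cvss_severity(6.5))  # Medium
```

Note that this label only describes severity; it says nothing about how likely the flaw is to be exploited.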

Virtually all security tools standardize on CVSS, and rightly so. When it comes to a standard measure of severity and impact, CVSS shines. But it has far too often been used in ways that make it ineffective for prioritizing and addressing material risk.

CVSS’s Key Limitations (a.k.a. CVSS is not a risk or prioritization measure)

Context Gap: CVSS reflects a vuln’s worst-case impact and ease of exploitation in a vacuum. A flaw could score 9.8 (critical) yet never be touched by attackers, or score 5.0 (medium) yet be a favored target in real campaigns. CVSS alone doesn’t give a full risk picture.

Static Snapshot: CVSS scores are set at disclosure and typically don’t update; they are static and don’t adapt to the changing threat landscape.

Chasing our tails: By over-emphasizing theoretical severity, teams often chase high-CVSS vulns that pose little actual risk, while overlooking moderate-CVSS issues that attackers actively exploit. The result is alert fatigue and potential burnout for security and engineering folk alike.

In practice, this means many “critical” CVEs never get weaponized, whereas some lower-severity bugs turn into the go-to entry points for attackers. Teams that rely on CVSS alone may patch a lot of scary-looking flaws yet still get burned by an unpatched “medium” that hackers actually used.

A typical organization fixes around 10% of disclosed vulnerabilities in any given month; if you consider the CVE disclosure chart above, that leaves quite a significant gap to fill. But even worse, this lack of proper prioritization leads to burnout, alert fatigue and misuse of the already limited capacity most organizations have.

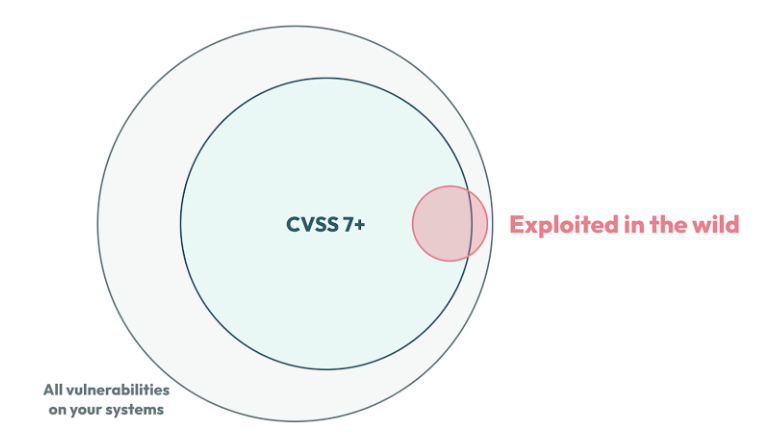

Illustrative example: data from late 2023 shows that among all vulnerabilities with a CVSS score ≥ 7 (green circle), only a small subset were observed exploited in the ensuing 30 days (red portion). This highlights the gap between high severity on paper and true risk in the wild.

CVSS is great and it has done wonders for the industry, but what has gotten us this far may not be all we need for going further.

Cyber security as an industry is maturing, and with that, new models, processes and frameworks need to be adopted if we are to truly address our backlogs and focus on real risks.

EPSS – Adding probability to help with prioritization

The Exploit Prediction Scoring System (EPSS), governed by FIRST, introduces a probabilistic, data-driven view of risk. Instead of severity, EPSS estimates the likelihood that a vulnerability will be exploited in the next 30 days, outputting a probability from 0 to 1 (0–100%). A higher EPSS means “attackers are statistically more likely to target that CVE soon”. This forward-looking approach injects real-world context that CVSS lacks.

If CVSS is a static snapshot of severity, EPSS is a dynamic probability indicator of exploitation.

Behind the scenes, it crunches a wide range of signals using machine learning. Notably, EPSS draws on:

Vulnerability metadata: Basic CVE info including the CVSS vector (e.g. required privileges, user interaction) and weakness type (CWE).

Public exploit code: Indicators that exploit proofs-of-concept exist (e.g. references in Exploit-DB or Metasploit). A posted exploit is a strong hint attackers could use the vuln.

Threat intelligence feeds: Evidence of real-world exploitation gleaned from security monitoring – intrusion detection systems, honeypots, malware telemetry, “in the wild” exploit reports, etc. These data sources tell EPSS which CVEs are actively being used in attacks (or share traits with those that are).

Temporal factors: How long since disclosure, how long since a patch or PoC was released, and other time-based features that affect exploit trends. For example, a bug that’s been public (and unpatched) for 6 months might attract more opportunistic exploits over time.

EPSS’s algorithm (on version 4 as of 2025) uses these inputs to train a predictive model (e.g. gradient-boosted trees) that outputs a probability score. Scores are published daily, and the model is periodically retrained as new CVEs and attack data come in.

This means EPSS scores can evolve as threat activity shifts – a major advantage over one-and-done CVSS ratings.

By design, EPSS fills the “threat likelihood” gap left by CVSS. Whereas CVSS tells us how bad it could be if exploited, EPSS estimates how likely it is to be exploited in the near term, i.e. the next 30 days.

This enables dynamic, risk-based prioritization: defenders can focus on the few vulnerabilities that are most likely to turn into actual incidents. In fact, the vast majority of CVEs get very low EPSS scores (reflecting that most won’t be attacked soon).

A vuln with CVSS 8.0 but EPSS 0.1% might be de-prioritized (if it’s unlikely to be exploited soon, it will still be fixed, but just not yet), whereas a CVSS 6.5 with EPSS 50% should probably jump to the front of the queue. This helps avoid wasting capacity on theoretical threats and directs effort to where it most reduces risk.
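To make the inversion concrete, here is a toy Python comparison that ranks the two findings from the paragraph above first by CVSS and then by EPSS. The identifiers `VULN-A` and `VULN-B` are made up for illustration, and EPSS is expressed as a probability (0.001 = 0.1%):

```python
from dataclasses import dataclass


@dataclass
class Finding:
    vuln_id: str  # hypothetical identifier, not a real CVE
    cvss: float   # CVSS base score, 0.0-10.0
    epss: float   # EPSS probability of exploitation in the next 30 days, 0.0-1.0


findings = [
    Finding("VULN-A", cvss=8.0, epss=0.001),  # High severity, 0.1% likelihood
    Finding("VULN-B", cvss=6.5, epss=0.50),   # Medium severity, 50% likelihood
]

# Ranking by severity alone puts the "scary" High first...
by_severity = [f.vuln_id for f in sorted(findings, key=lambda f: f.cvss, reverse=True)]
# ...while ranking by exploitation likelihood flips the order.
by_likelihood = [f.vuln_id for f in sorted(findings, key=lambda f: f.epss, reverse=True)]

print(by_severity)    # ['VULN-A', 'VULN-B']
print(by_likelihood)  # ['VULN-B', 'VULN-A']
```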

The sad part is that not all security tools use EPSS, while virtually all use CVSS. So the very tools we use in security don’t always give us the true picture of the risks we ought to address. But given EPSS was only launched in 2019, it’s kinda understandable why most security tools don’t include this score yet.

But you can query FIRST’s daily-updated EPSS API for these scores to add context to your prioritization.
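A minimal Python sketch of such a lookup, using only the standard library. It assumes the public endpoint `https://api.first.org/data/v1/epss`, a comma-separated `cve` query parameter, and a JSON response whose `data` list holds rows with `cve`, `epss` and `percentile` fields returned as strings; check FIRST’s API documentation for current parameters and limits before relying on it. The helper names are illustrative:

```python
import json
import urllib.request

# Assumed public endpoint; see FIRST's EPSS API documentation for parameters and limits.
EPSS_API = "https://api.first.org/data/v1/epss"


def build_epss_url(cve_ids: list[str]) -> str:
    # CVE IDs contain only letters, digits and hyphens, so they are URL-safe as-is;
    # the "cve" parameter is assumed to take a comma-separated list.
    return f"{EPSS_API}?cve={','.join(cve_ids)}"


def parse_epss(payload: dict) -> dict[str, tuple[float, float]]:
    """Return {cve_id: (epss_probability, percentile)} from an API response body.

    Both numbers are assumed to arrive as strings, so they are converted to floats.
    """
    return {
        row["cve"]: (float(row["epss"]), float(row["percentile"]))
        for row in payload.get("data", [])
    }


def fetch_epss(cve_ids: list[str]) -> dict[str, tuple[float, float]]:
    """Query the EPSS API (network call) and return the parsed scores."""
    with urllib.request.urlopen(build_epss_url(cve_ids), timeout=30) as resp:
        return parse_epss(json.load(resp))
```

Usage would look like `scores = fetch_epss(["CVE-2021-44228"])` followed by `epss, percentile = scores["CVE-2021-44228"]`. Keeping parsing separate from the network call makes it easy to cache the daily results rather than hitting the API for every scan.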

NOTE: EPSS provides both the probability score for a particular vulnerability and its percentile, i.e. where that CVE sits relative to all other scored vulnerabilities in the broader ecosystem.

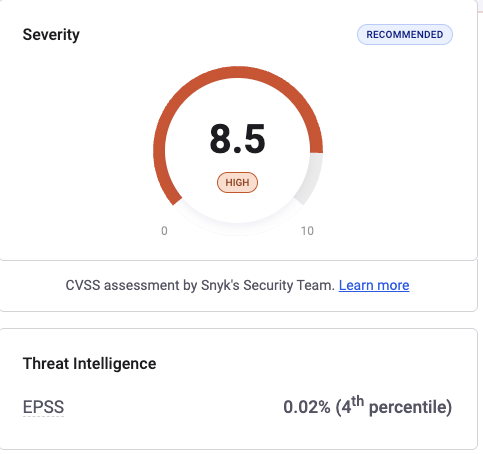

Consider the example below:

CVSS 8.5 (a High-severity vulnerability, which should mean it’s scary, which should mean breathing down the necks of your engineers to “fix it now”)

But the EPSS score is 0.02% (a very low probability of being exploited within the next 30 days) AND the EPSS percentile is 4%, which means roughly 96% of other scored vulnerabilities are more likely to be exploited than this particular one.
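The percentile arithmetic can be sketched as follows, assuming the percentile is the share of scored CVEs whose EPSS score is equal to or lower than the one in question. FIRST computes it over every scored CVE on a given day; the 25-score population below is purely hypothetical:

```python
from bisect import bisect_right


def epss_percentile(score: float, all_scores: list[float]) -> float:
    """Fraction of scored vulnerabilities whose EPSS score is <= `score`."""
    ranked = sorted(all_scores)
    return bisect_right(ranked, score) / len(ranked)


# Hypothetical population of 25 EPSS scores: one at 0.02%, the rest at 1%.
scores = [0.0002] + [0.01] * 24
pct = epss_percentile(0.0002, scores)
print(f"percentile={pct:.0%}, scored higher={1 - pct:.0%}")  # percentile=4%, scored higher=96%
```

Because the percentile counts scores at or below this one, `1 - pct` is the share of vulnerabilities with a strictly higher exploitation probability.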

Example of EPSS context - courtesy of Snyk

So just by incorporating EPSS, we went from “fix this HIGH severity issue NOW, within a week, as per our internal policy” to “let’s rather focus our already limited capacity on vulns that have a higher probability of being exploited this month”. Of course, the challenge remains of us (security folk) having to report our compliance status and do all the other checkbox exercises we all know we must do.

Most cyber security professionals and leaders started their careers knowing only CVSS, and most tools were written during that era. Surfacing both CVSS and EPSS in our reporting and dashboards will take time and will be an educational process, but for better prioritization and a real grasp of actual risk, it’s a battle worth fighting.

But EPSS alone is not enough, just as CVSS alone is not enough: EPSS is NOT 100% accurate. In fact, a few publications I’ve read attest to this, but it’s the best we’ve got for now (as of version 4).

CISA KEV – Confirmed Attacks in the Wild (Reactive but Reliable)

If EPSS is forward-looking and probabilistic, CISA’s Known Exploited Vulnerabilities (KEV) catalog is the ground-truth reality check. The KEV catalog, maintained by the U.S. Cybersecurity & Infrastructure Security Agency, is essentially a “Most Wanted” list of CVEs that we know for a fact have been, or continue to be, exploited in actual attacks.

To appear on KEV, a vulnerability must have credible evidence of exploitation (from incident reports, threat intel, vendor advisories, etc.), and it typically relates to widely used software or critical infrastructure. In other words, KEV is a curated subset of CVEs that have crossed the line from theoretical to real-world threat.

Key features of KEV:

Definitive proof: A CVE gets added to KEV only when there is verified evidence of exploitation in the wild. This could be a malware campaign using the vuln, a documented breach, a public exploit actively weaponized, etc. No hypotheticals here – if it’s on KEV, someone malicious has used it. This makes KEV entries high-confidence red flags for defenders.

Binary, not graded: KEV is simply a list. A vuln is either “on the list” (exploited) or not. There’s no score or probability – it’s a yes/no flag that tells you exploitation has occurred.

Retrospective & reactive: By nature, KEV is after the fact. It documents past exploitation, so it lags behind emerging threats; it’s meant for catching up on and responding to known exploited bugs, NOT for anticipating zero-days or newly disclosed n-days.

KEV-EAT (“Caveat” for KEV - sorry dad joke): As of late 2024, CISA’s KEV catalog contained only ~1,228 vulnerabilities out of 260,000+ CVEs tracked – that’s <0.5% of all known vulns. Even accounting for scope (KEV focuses on significant vulns that affect government and critical systems), it’s clear that KEV is not comprehensive. Plenty of exploited CVEs never make it onto CISA’s list, especially if they’re exploited in more regional or targeted contexts, or not deemed a threat to U.S. interests. It’s not great, but it’s something.
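Because KEV is a yes/no list, checking it is a simple set-membership test. A minimal Python sketch, assuming CISA’s JSON feed at the URL below and its layout (a top-level `vulnerabilities` array whose entries carry a `cveID` field); verify both on cisa.gov, and refresh the set regularly since entries are added over time. The helper names are illustrative:

```python
import json
import urllib.request

# CISA's JSON feed of the KEV catalog (assumed URL; verify on cisa.gov).
KEV_FEED = "https://www.cisa.gov/sites/default/files/feeds/known_exploited_vulnerabilities.json"


def kev_cve_ids(catalog: dict) -> set[str]:
    """Extract the set of CVE IDs from a parsed KEV catalog document."""
    return {entry["cveID"] for entry in catalog.get("vulnerabilities", [])}


def load_kev() -> set[str]:
    """Download the catalog (network call) and return its CVE IDs."""
    with urllib.request.urlopen(KEV_FEED, timeout=30) as resp:
        return kev_cve_ids(json.load(resp))


def is_known_exploited(cve_id: str, kev_ids: set[str]) -> bool:
    # KEV is binary: a CVE is either listed (exploited) or it is not.
    return cve_id in kev_ids
```

Keep the caveat above in mind: `False` here only means “not on CISA’s list”, not “never exploited”.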

A Unified Approach to Risk-Based Vulnerability Management

To summarize the evolution of vulnerability prioritization:

CVSS (2005) – “How bad could it be?” (Technical severity on a theoretical basis, 0–10)

EPSS (2019) – “How likely is it to be attacked soon?” (Probabilistic exploit forecast within 30 days)

CISA KEV (2021) – “Has it already been seen in attacks?” (Yes/No confirmation of in-the-wild exploitation)

Each of these has a role. CVSS remains useful as a baseline for impact; it’s ubiquitous and simple, though coarse. EPSS adds the urgent dimension of time-bound likelihood, helping teams filter the noise of “thousands of vulns” down to the few that attackers are likely to hit next. KEV cuts through any model uncertainty with hard evidence: patch these now or attackers will continue to exploit them.

In practice, a mature vulnerability management program will use these in combination. For example:

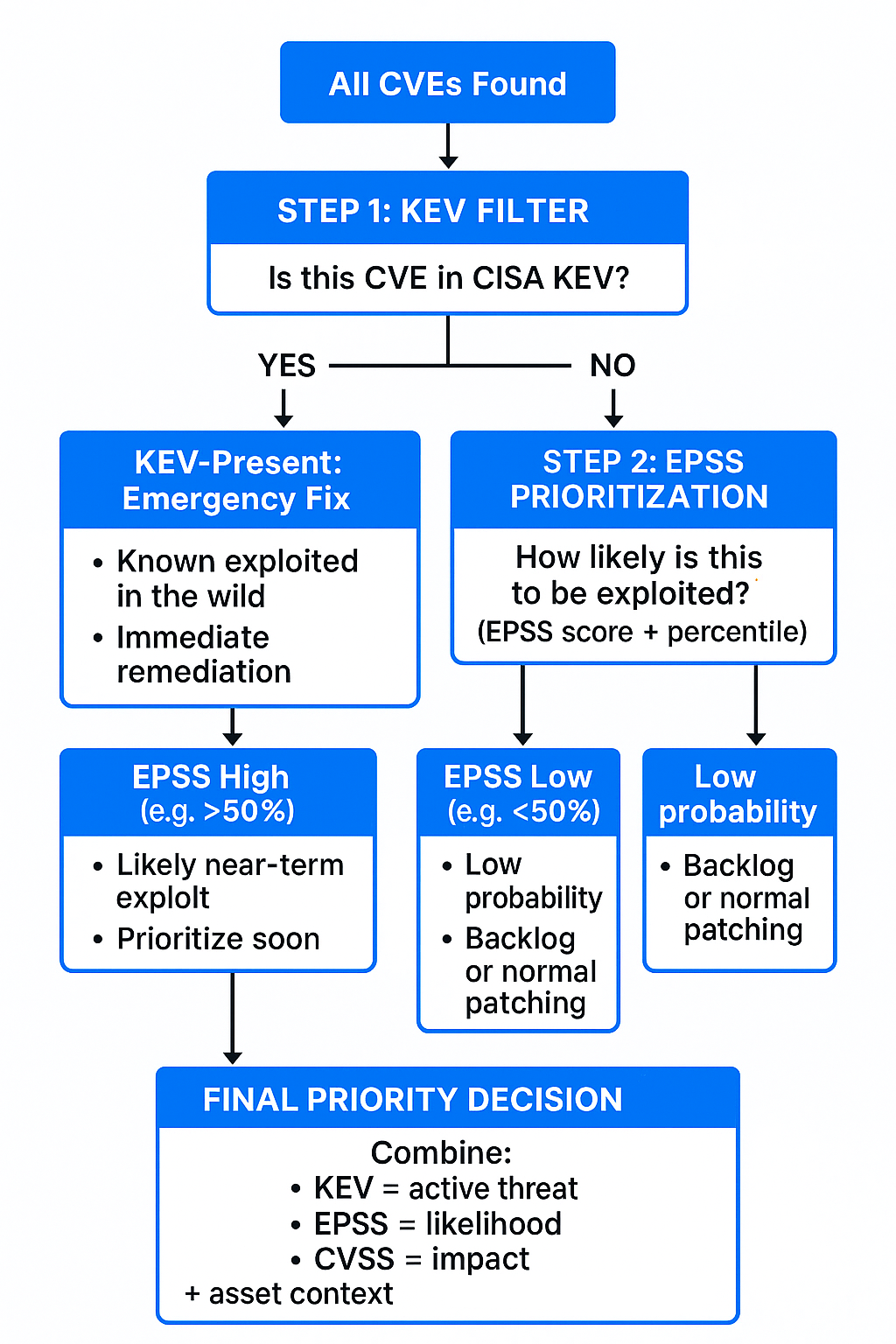

Prioritization example using KEV, CVSS and EPSS filtering

Use KEV as a first-pass filter for emergency remediation and prioritization: any KEV-listed vulnerability on an asset you have should be treated as an active threat (since adversaries are known to exploit it). These are your “drop everything and fix” items, often aligned with external mandates.

Use EPSS to prioritize among the remaining non-KEV vulnerabilities. Those with high EPSS scores and percentiles (e.g. >50% probability) are strong candidates for near-term exploitation. They might not be exploited yet, but the data says they are likely to be soon, so address them before they become the next KEV entry.

Keep CVSS in the mix for context, especially to weigh the potential impact if a vuln is exploited. CVSS still tells you how bad a compromise could be. For two vulns with similar EPSS, the one with higher CVSS (e.g. remote code execution vs. simple DoS) likely warrants higher priority due to impact. And CVSS is often needed for compliance reporting and communication (everyone understands a “CVSS 9.0 Critical” versus a “CVSS 3.5 Low”).

An integrated, risk-based strategy might have you patch all three sets: (1) anything on KEV (proven exploits), (2) anything above a certain EPSS or LEV threshold (high likelihood; LEV being NIST’s proposed Likely Exploited Vulnerabilities metric), and (3) anything with extreme CVSS scores that you simply cannot ignore (even if EPSS is low for now). This layered approach ensures you’re covered across confirmed threats, probable near-future threats, and worst-case impact.
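The layered logic above can be sketched in a few lines of Python. This is a minimal illustration of the KEV, then EPSS, then CVSS ordering described in this article, not a reproduction of any published framework; the thresholds, field names and `VULN-*` identifiers are all hypothetical:

```python
from dataclasses import dataclass

# Hypothetical cut-offs for illustration; tune them to your own capacity and risk appetite.
EPSS_THRESHOLD = 0.5   # the ">50% probability" example from the text
CVSS_THRESHOLD = 9.0   # "extreme" severity worth fixing even when EPSS is low


@dataclass
class Finding:
    vuln_id: str    # hypothetical identifier
    cvss: float     # CVSS base score, 0.0-10.0
    epss: float     # EPSS probability, 0.0-1.0
    in_kev: bool    # listed in CISA KEV?


def tier(f: Finding) -> int:
    """Return a remediation tier; lower numbers get fixed first."""
    if f.in_kev:
        return 1  # proven exploitation: drop everything and fix
    if f.epss > EPSS_THRESHOLD:
        return 2  # likely to be exploited in the next 30 days
    if f.cvss >= CVSS_THRESHOLD:
        return 3  # worst-case impact too high to ignore
    return 4      # regular, scheduled remediation


def prioritize(findings: list[Finding]) -> list[Finding]:
    # Sort by tier, then by higher EPSS, then by higher CVSS (impact as tie-breaker).
    return sorted(findings, key=lambda f: (tier(f), -f.epss, -f.cvss))


findings = [
    Finding("VULN-4", cvss=8.5, epss=0.0002, in_kev=False),
    Finding("VULN-3", cvss=9.8, epss=0.01, in_kev=False),
    Finding("VULN-2", cvss=6.5, epss=0.60, in_kev=False),
    Finding("VULN-1", cvss=5.3, epss=0.20, in_kev=True),
]
print([f.vuln_id for f in prioritize(findings)])  # ['VULN-1', 'VULN-2', 'VULN-3', 'VULN-4']
```

Note that the KEV-listed “medium” jumps ahead of the 9.8 critical, and the 8.5 High with negligible EPSS lands in the scheduled backlog rather than the emergency queue. Sorting within a tier by EPSS and then CVSS reflects the point that, for two vulns with similar likelihood, the higher-impact one deserves priority.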

Conclusion: Evolving Toward Data-Driven Vulnerability Management

CVSS was a great start for its time (2005): it standardized how we talk about vulnerability severity. But in today’s threat landscape, severity alone doesn’t equal risk or priority. We’ve seen real attacks pivot to whatever gets results, even if it’s a “medium” on paper. The emergence of open metrics and catalogs like EPSS and KEV marks a pivotal shift from a one-dimensional view of vulns to a multi-dimensional risk perspective.

By incorporating confirmed real-world exploitation data (KEV), probabilistic modeling (EPSS) and severity of impact (CVSS), security teams can prioritize smarter and faster.

Remaining challenges

Security tooling needs to incorporate these additional scores for true context; however, I admit it’s a challenge given KEV and EPSS are not static, which means security tooling will need to refresh them daily.

Education, both internally for our security leaders and colleagues and for compliance folk. CVSS has been the benchmark for what seems like forever, while EPSS (2019) and KEV (2021) are still new and not everyone is even aware of them.

Update 30 Nov 2025:

A recently published study, “Vulnerability Management Chaining: An Integrated Framework for Efficient Cybersecurity Risk Prioritization”, arrives at similar conclusions using large-scale empirical evidence.

Analysing 28,377 real-world CVEs, the authors demonstrate:

• 14–18× efficiency improvements,

• 85%+ coverage of actually exploited vulnerabilities,

• and a ~95% reduction in urgent remediation workload

when combining KEV, EPSS and CVSS in a structured prioritisation flow.

Their results provide strong quantitative validation for the layered, data-driven approach described in this article.